Tech CEOs are increasingly vocal about the next frontier for data centers: space. Google’s Sundar Pichai recently quipped about placing an AI chip “somewhere in space” by 2027, even joking about a potential encounter with the Tesla Roadster. This isn’t just idle chatter; it signals a serious exploration of off-world infrastructure.

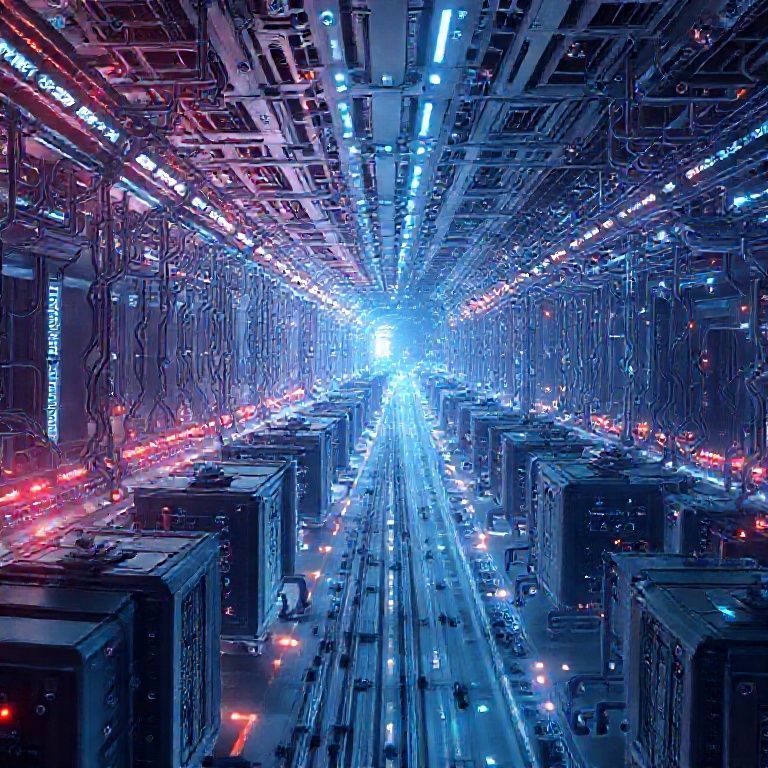

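The motivations are multifaceted. Cooling is a frequently cited one, though it is subtler than "the frigid vacuum" suggests: a vacuum has no air or water to carry heat away, so hardware in orbit can shed heat only by radiating it toward the cold background of deep space, which requires large radiator surfaces. Latency is another potential driver. Light travels faster through a vacuum than through optical fiber, so long-haul routes relayed between satellites could in principle beat terrestrial links for applications like real-time financial trading, although the trip up to orbit and back adds delay of its own. Furthermore, off-world infrastructure would be insulated from terrestrial disruptions such as natural disasters and grid failures.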
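To get a feel for the cooling question, the radiator area a space-based data center would need can be estimated from the Stefan-Boltzmann law, since radiation is the only way to reject heat in a vacuum. The sketch below is a back-of-envelope illustration, not a thermal design: the emissivity of 0.9, the 3 K sink temperature, the two-sided flat panel, and the 1 MW heat load are all assumed values chosen for illustration, and the function name `radiator_area_m2` is hypothetical. It also ignores heating from sunlight and from Earth, which would increase the required area.

```python
# Stefan-Boltzmann constant in W / (m^2 K^4)
SIGMA = 5.670374419e-8


def radiator_area_m2(heat_w, radiator_temp_k, emissivity=0.9,
                     sink_temp_k=3.0, sides=2):
    """Estimate the flat-panel area needed to radiate `heat_w` watts.

    All default values are illustrative assumptions, not figures from
    any real design. Solar and Earth heat loads are ignored.
    """
    # Net radiated power per square metre of one panel face
    net_flux = emissivity * SIGMA * (radiator_temp_k**4 - sink_temp_k**4)
    # A panel that radiates from both faces needs half the area
    return heat_w / (net_flux * sides)


if __name__ == "__main__":
    heat_load_w = 1_000_000.0  # assumed 1 MW of waste heat
    for temp_k in (300.0, 350.0):
        area = radiator_area_m2(heat_load_w, temp_k)
        print(f"{temp_k:.0f} K radiator: about {area:,.0f} m^2 per MW")
```

Under these assumptions, a radiator running near room temperature needs on the order of a thousand square metres per megawatt, and the area shrinks quickly as the radiator runs hotter because radiated power scales with the fourth power of temperature.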

The implications could be far-reaching. A successful deployment could reshape cloud computing and potentially shift the balance of power among providers. It would also spur innovation in space technology, helping to drive down launch costs and accelerate the commercialization of space. Proponents imagine a future in which Earth-bound regulations and resource constraints, such as land, water, and grid capacity, are less of a limiting factor in technological advancement.

However, significant hurdles remain. Launching equipment into orbit is expensive, and hardware that fails there cannot easily be repaired or replaced. Power generation, data transmission, and radiation shielding pose major engineering challenges. International regulations and space debris mitigation must also be addressed before any large-scale deployment.

Ultimately, the push for data centers in space reflects a relentless pursuit of performance and efficiency. While widespread adoption is years away, the ambitions of tech leaders suggest that cosmic compute is no longer a science fiction fantasy but a tangible goal. The next decade will likely determine whether this vision becomes a reality.